Note: This article was originally posted as a Thales Group blog on August 3, 2023, under the title "Cloud Key Management Solution for Azure, Azure Stack and M365." The video was added for this post.

Uncover Your Cybersecurity Blind Spots

Cybersecurity is a strategic risk that should be managed at the highest levels of an organization. In fact, the World Economic Forum’s Global Risks Report 2023 once again ranked widespread cybercrime among the top ten critical global threats. Of all the potential global risks to economies and societies, including natural disasters, geopolitical conflict, energy supply, global debt and rising inflation, widespread cybercrime ranks #8 in both the short-term and long-term outlooks.

Ranking among the top ten critical global threats is eye-opening! To mitigate this risk and uncover organizational blind spots, today’s enterprises must look for leading-edge solutions that help with data governance and compliance.

Thales Solutions for Microsoft Azure, Azure Stack and M365

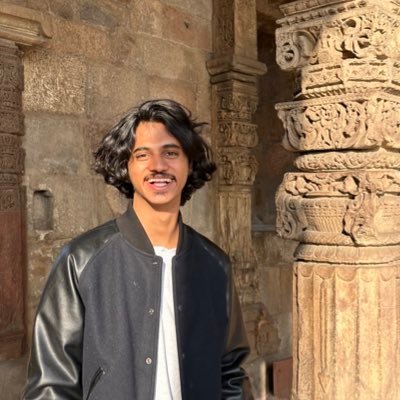

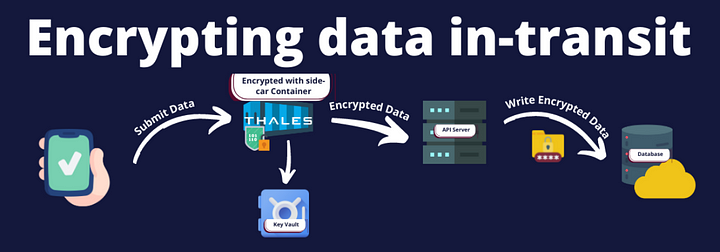

You can simplify the way your organization discovers, protects and controls its sensitive data. Thales has integrated its CipherTrust Cloud Key Management (CCKM) solution with Microsoft Azure, Azure Stack and Microsoft 365.

CCKM reduces your operational burden and increases efficiency. CCKM manages and synchronizes native keys, even if you have already created thousands of native keys at your cloud provider. CCKM can also help you demonstrate compliance with internal, industry and national regulatory requirements, giving you the confidence to move sensitive workloads that may be stuck on-premises to Azure.

CCKM Benefits:

- Simplify compliance by taking control of your encryption keys and your data

- Achieve cost savings using automated key lifecycle management

- Use a single pane of glass to help eliminate security holes introduced by human error: set policies once and apply them consistently wherever data is stored

- Support strategies for workload portability to increase operational resilience as part of a robust business continuity and disaster recovery plan

- Support all major public clouds

- Choose flexible deployment options: on-premises, hybrid cloud, or as a service

“Thales is a global Microsoft partner focused on delivering solutions for Azure Cloud, Azure Stack and M365, on-premises storage systems, intelligent edge appliances, and cloud-based Microsoft Azure Services. They are working to help customers transform their businesses to drive digital transformation for people, organizations, and industries worldwide. CipherTrust Cloud Key Management has been verified against Microsoft key products, is available on the Azure Marketplace and is simple to adopt for Azure customers.” – David Nunez Tejerina, Principal Product Manager, Microsoft

Bring Your Own Key

With Thales’ Bring Your Own Key (BYOK) functionality, customers can maintain control of sensitive data using external key management services, ensuring full encryption capabilities, key lifecycle management, and centralized key management across clouds, regions, accounts, subscriptions, projects, applications, org IDs and more. In addition to BYOK, CCKM helps manage native Azure keys in Standard and Premium Key Vaults as well as in Managed HSM pools. Both CipherTrust Manager and Luna Network HSM can serve as key sources.

Single Pane of Glass, Single Vendor

According to the Thales 2023 Data Threat Report, 93% of organizations use four or more key management solutions (includes enterprise key manager vendors and cloud provider key managers). CCKM manages all of your encryption keys across clouds and services with a single pane of glass from a trusted vendor.

Integrated with Microsoft Azure, Azure Stack and Microsoft 365, CCKM increases efficiency by reducing the operational burden. Giving customers lifecycle control, centralized management within and across clouds, and unparalleled visibility into cloud encryption keys reduces key management complexity and operational costs. Thousands of keys and native key stores are difficult to manage manually, and organizations may struggle to apply key lifecycle management policies such as rotation, backup and role-based access control consistently across their entire digital estate, which leads to security holes and failed audits. 99% of data breaches occur because of human error. You can shrink the threat surface introduced by human error by using the centralized, automated key lifecycle management that CCKM provides.

Multi-Cloud Support

Organizations with multi-cloud deployments are struggling to protect their sensitive data: while the cloud delivers a multitude of benefits, these can often be offset by a multitude of challenges. Because there is no industry standard, managing the different native key stores and native key management tools across clouds and on-premises can also be very time-consuming. Based on customer testimonials, CCKM can provide a 30x savings in time and cost when managing thousands of native key stores across hybrid multi-cloud environments, freeing IT teams to focus on other urgent business priorities.

Operational Sovereignty

CCKM helps organizations maintain control of their digital sovereignty across major public and government clouds, including Microsoft Azure, Azure Government, Amazon Web Services, Google Cloud, Oracle Cloud, Salesforce and SAP. The solution lets you run in different environments to support a strong business continuity plan, and it can give an organization a holistic view of where all key workloads and sensitive data are located.

Free Trial: Try Data Protection On Demand with a 30-day free evaluation!

For more information, see the Product Brief and Solution Brief.

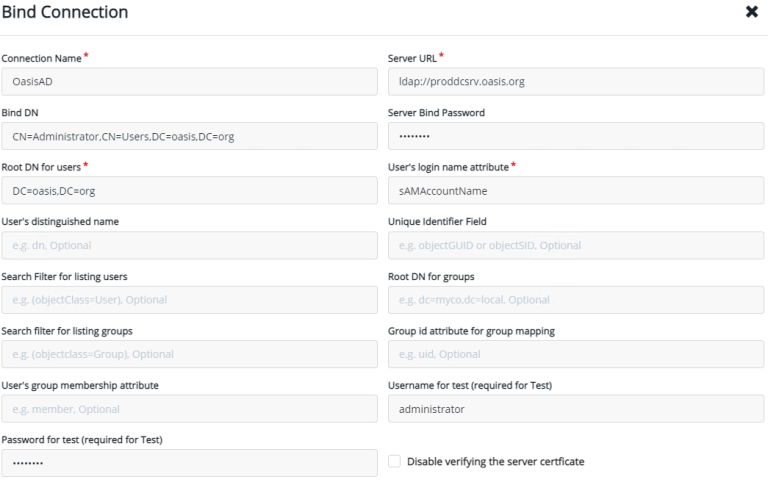

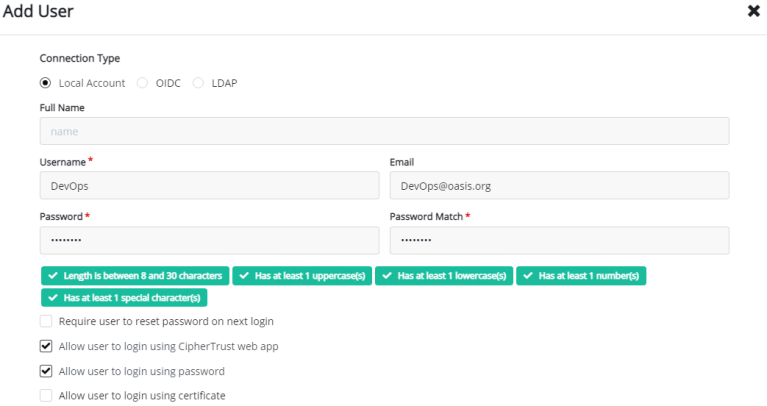

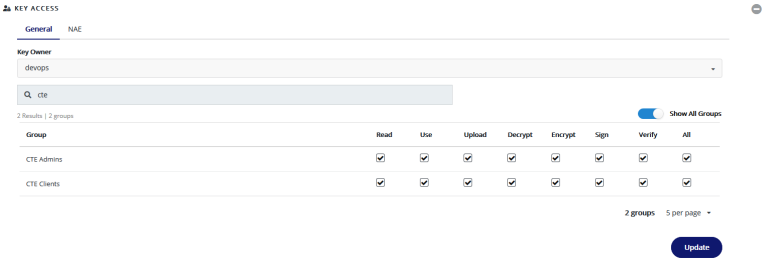

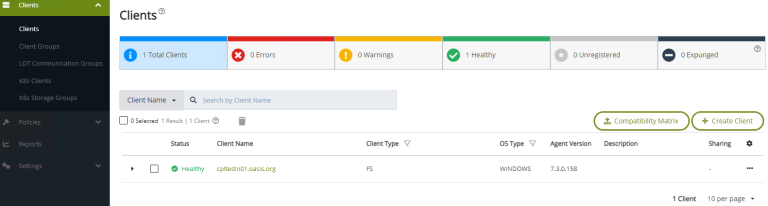

Why should you integrate Thales CipherTrust with your Microsoft Active Directory? What are the benefits of integration, and how is it done? Does Thales CipherTrust Manager (CTM) replace your Active Directory Group policy?